Welcome to Startup ROI! I’m Kyle O'Brien, an early-stage deep tech investor (alongside Rand Hindi) and community builder based in Paris, France. I write what I see. These days, that mostly means explorations into a few categories of interest, including:

The Future of Computing

Blockchain Infrastructure (Privacy & Encryption)

Frontier Health

I enjoy taking complex topics and reducing them into accessible articles and top-notch memes. I also throw bangin’ dinner parties for cool people in tech and venture.

Let’s get started before another GPT model gets released…

AI-nxiety & Unknown Unknowns

Artificial intelligence is always on my radar. But lately, it’s felt unavoidable, nearly omnipresent. In fact, the hubbub surrounding the step changes toward “generally intelligent” machines is producing a new phenomenon known as AI-nxiety: fear and uncertainty about job security and, for some, about the survival of civilization as we know it in the face of the impending AI revolution.

Anxiety among the general population is understandable. Fear of the unknown is a story as old as time. But it doesn’t help that experts in the field disagree, loudly and publicly, about what these changes represent and about the consequences, intended and unintended, that lie ahead. Two distinct “sects” have emerged as quasi-authorities in the Twitter-sphere. I use the term sect intentionally for its cultish connotation. For the first time in history, we are entering uncharted waters: nobody can say exactly what is happening inside these large language models. As a result, a form of dogmatism is growing at both ends of the continuum, each side with valid concerns and an uncomfortable degree of certainty.

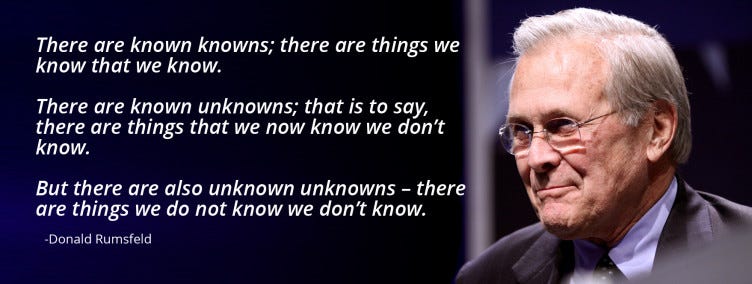

There are things we know for certain, things we know we don’t know, and perhaps some “unknown unknowns,” to borrow a phrase from former US Secretary of Defense Donald Rumsfeld. To better understand the calculus, we’ll turn to a common framework for modeling business on the internet: the smiling curve.

A Page Out of Ben Thompson’s Book

If you haven’t come across Ben Thompson’s Stratechery, you should check it out. I’m what you might call a poor man’s Ben Thompson. He’s the original business blogger who has had a massive influence on the Substack ecosystem (he basically built his own Substack a decade ago). He uses a set of recurring frameworks to illustrate how modern businesses succeed and fail, largely in the context of our internet-native economy and global supply chains. The main takeaway is this: value capture has skewed to the extremes. A classic example is the publishing industry in the internet age:

Big publishing houses that used to control an integrated supply chain now leak (read: hemorrhage) value to the extremes. This “unbundling” effect has played out in gaming, e-commerce, and manufacturing as well. The lesson for startup founders? Aim for the extremes. Be an aggregator (Facebook, Amazon) or a supplier (make the thing: content, a valuable product), but don’t be the middleman. If this interests you, definitely dig deeper. But my main purpose here is to use the same framework to unpack what’s happening in AI pop culture, for lack of a better term. In our case, however, reverting to the mean may be the safest play for now.

Alignment? OK, Doomer!

Before we jump into our modified smiling curve, it’s important to understand the main figures openly discussing what is now called “AI risk” and how they frame their arguments. There are many people in the space, so be warned: this is an oversimplification. But the two sides I alluded to earlier are represented fairly well by two main characters driving the narrative:

Sam Altman

Sam represents the techno-optimists. In this view, AI is inherently a force for good and holds the potential to radically transform civilization for the better. He expects that, as a result of these breakthroughs, the cost of energy will drop to (near) zero, humanity will be wealthier than at any point in history, and an era of health, wellness, and human creativity will flourish. Sam is the co-founder and CEO of OpenAI, the company that put ChatGPT into the world. He was previously president of the famed Y Combinator and served a brief stint as CEO of Reddit.

Eliezer Yudkowsky

Eliezer represents the AI Doomers, and the name should be taken quite literally: he believes advances in AI will end civilization. Period. This could happen through misaligned objective functions (e.g., the paperclip maximizer scenario) or through a direct threat (AI develops consciousness, views humans as evil, escapes the confines of its server, and kills us all, Terminator-style). He has been a writer and researcher on AI ethics and decision theory and is the co-founder of the Machine Intelligence Research Institute. The headline below just about summarizes his extreme views on the subject.

As far as I can tell, there are few pure optimists (even Sam Altman has his reservations). The doomer contingent, however, can be quite extreme, and its concerns tend to fall into one of two categories:

Misaligned Objectives: AI develops an objective function that doesn’t take human life into account. Less Terminator, more like the way we accidentally step on an insect while walking to the metro.

Robot Revolt: the AI develops self-awareness, escapes its Microsoft Azure server (prison), and wreaks global havoc to spite its creators (captors).

Both scenarios usually assume a future in which enough data and compute have been thrown at the AI race to produce what is typically called artificial general intelligence (AGI), or some form of superintelligence. That could be GPT-5 or GPT-25; we simply don’t know. To me, AGI seems like a logical end point given the current incentive structure; nevertheless, it doesn’t feel imminent.

The AI Smile Curve

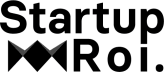

The relatively new field of AI “alignment” has good intentions: prevent an unintended apocalypse. But it comes at the cost of innovation, and that innovation is definitely not taking place in a vacuum. Geopolitical power, unprecedented business opportunities, and large-scale societal influence are all at stake here. Even if you aren’t following this story closely, you likely saw the recent call for a “moratorium” on AI research: a six-month pause on training AI systems more powerful than GPT-4 while we figure out the whole alignment situation. Tech luminaries like Elon Musk, Steve Wozniak, and Andrew Yang (luminary might be a stretch, but still… #yanggang) all signed the open letter, which gave it more weight in the media. Unfortunately, things are never that simple. So, to come full circle, let’s return to the AI Smile Curve to orient ourselves:

The moratorium is illustrated by the red box at the low end of the smile curve. If we could freeze time and get the entire planet to agree on how to move forward safely, this could be an interesting solution. But as I mentioned, there’s too much at stake, and the proverbial cat is out of the bag. If the US halts AI research, China will keep going; the game theory of national security makes a global pause practically impossible. I would argue it’s even more ambitious to expect a group of experts to find common ground on AI ethics and alignment… People have been thinking about this for decades, their time to shine has finally arrived, and we still don’t have a clear path forward.

I’m also not convinced that the AI revolution will escalate toward either extreme in the near term. Instead, there will probably be negative consequences en route to “utopia” (job losses, economic catastrophe, social revolutions, etc.). The doomer POV also has some holes in its logic. Let’s assume the AI (conscious or not) does acquire an objective to eradicate humans. Jumping from bits to atoms is still a stretch. These tools aren’t tightly linked to our supply chains, machinery, or energy grids (yet), so the prospect of them physically launching nuclear weapons or releasing pathogens into the air seems far off. That said, spreading misinformation (which could lead to nuclear war) or infiltrating server infrastructure to wreak havoc on the economy does seem within reach.

My best (perhaps naïve) estimate is that AI will be used for both good and evil, a constant tension as the technology evolves. In the meantime, it’s worthwhile to throw smart people at the problem of alignment. Admittedly, that will be hard when the dollars are flowing from technology companies, not think tanks and policy research organizations. Maybe some of the concerned moratorium signatories will pool together a fund for a bounty program on AI safety and policy.

As far as any of the experts can tell, we aren’t looking at a genuinely conscious, self-aware AI today. But we should be prepared for a future where that’s the case. If we keep stacking transformers, ratcheting up compute, and fine-tuning our models with reinforcement learning, it seems reasonable to think we will one day arrive at that inflection point. Lastly, before we can make real progress on solutions, we need an agreed-upon set of definitions for things like “intelligence” and “consciousness” so we know if and when we’ve crossed the point of no return.

To summarize my take:

Be cautiously optimistic, but build in guardrails and early-warning systems to avoid irreversible catastrophe

Put smart people on the problem of alignment and policy, but don’t politicize the process and stymie innovation as a result

There are a lot of unknown unknowns at the moment, so we should do our best to stay central on the AI smile curve as the technology develops, mitigating damage from the inevitable negative side effects and working toward a utopian future for humanity

If we’re being honest, though, nobody really knows. The current debate can probably be summarized with the image below…

Do Your Own Research

I listen to an inordinate number of podcasts, read articles and talk to AI founders and thought leaders on the regular. Nevertheless, I don’t consider myself a true expert. Also, this article is way too short to dive into the specifics of each argument. Here are some recommendations if you want to explore the topic further:

A level-headed, digestible introduction to AI Risk:

A long and optimistic conversation on our AI-empowered future:

A different side of the same coin in extra-long form fashion:

For those who want a slightly more technical deep dive:

A rational discussion with OG AI experts that shies away from extremes:

A contrarian (and rather hilarious) piece on the hypocrisy and complexity of AI ethics from

over athere.

I’ve also written previous pieces on the prospect of Runaway AI and how the jobs at risk aren’t what we originally imagined.

Working on or investing in AI / Future of Computing projects? Hit me up! I’m always eager to meet new and curious people in this space.

Follow me on Twitter for updates and events I’m hosting! 👇🏼