🤗 Hugging Face & The Race Against Runaway AI

The Most Ambitious AI Project You've Never Heard Of

Welcome to Startup ROI, where we explore global technology trends and how they manifest themselves in France 🇫🇷 . Whether you're an entrepreneur, investor or tech enthusiast, I'm glad to have you here!

Want to sponsor or collaborate? 📩 bonjour@startup-roi.com

New logo, who dis?

I figured it was about time I upgraded my lopsided, Microsoft Paint-looking crown logo to something more representative of the brand today. We've got a new typeface, sleek design & multiple colors for coordinating all the excellent (read: eccentric) graphics I plan to create this year. Tell me what you think!

Startups with pitch decks claiming their product leverages artificial intelligence attract 15% to 50% more in their funding rounds than other technology startups. This is not a coincidence – although perhaps a brazen display of fake-it-till-you-make-it culture in the tech sector. Artificial intelligence (hereafter, "AI"), once the unthinkable theoretical musings of early 20th century futurists, is now achievable. Achievable is an understatement. It's conceivable that every one of us interacts daily with some form of artificial intelligence, knowingly or not. The breakneck pace at which the research is developing and bleeding into commercial use is fascinating to some and worrisome to others. Intrinsically, the outcomes should be net-positive, right? Who doesn't want smarter software, better algorithms, and pure customer delight? In its current state, it's unlikely we'll be bowing down to our superior, silicon-based overlords. But the foundational work taking place today sets the stage for tremendous – potentially dangerous if unchecked – progress.

TL;DR (AI-Assisted Summary, just kidding)

The term "artificial intelligence" is tossed around loosely, but describes an intelligent algorithm trained through machine learning techniques like neural networks & deep learning

There are basically two types: weak and strong AI; most everything you encounter today is considered definitionally weak although we are edging closer to strong algorithms daily

Natural Language Processing (NLP) is one of the fastest-growing subsets of AI Research due to its vast number of practical applications; however, ethical implications are making fundamental advances slightly less palatable to the general public

Hugging Face, a French-founded, Brooklyn-based startup, has cornered the AI developer community through a comprehensive repository of state-of-the-art models, allowing anyone to leverage cutting-edge AI with a few lines of code

They have also pioneered an ambitious, global research project aimed at evaluating the inherent risks of ever-advancing AI

The current research landscape, barriers to entry, and misaligned incentives have led to sketchy behavior from "big tech" (including the firing of an Ethical AI co-lead at Google)

The fate of AI research and accessibility (and dare I say, humanity?) might just be in the hands of this cute little company with an emoji as its namesake

Oh, So You Passed the Turing Test?

We'll attend to the doomsday prophecies momentarily. But first, I think we should level-set on the term AI, the basics of how it works, and finally the mechanics of dissemination. Artificial intelligence is the umbrella term, often used synonymously (and mistakenly) with training methodologies used to develop AI with the help of enormous data sets.

There are three general categories of AI, associated primarily with the strength of the algorithm:

Artificial Narrow Intelligence (ANI)

Artificial General Intelligence (AGI)

Artificial Super Intelligence (ASI)

The first, ANI, is the classification we are all accustomed to today. This classification is deemed "weak" AI and is typically attuned to a specific task: winning a chess match or identifying a single person among a set of photos. More advanced tools like chatbots or virtual assistants (Alexa, Siri) start to brush up against the next category, but are still considered narrow. To reach AGI, the algorithm needs to operate on par with human intelligence; logically, ASI would imply beyond human intelligence. Think HBO's Westworld or some eerie Ex Machina vibes. Turing test-passing androids with god-like powers. Cool but scary.

Though we haven't crossed the threshold yet, the advances within just the narrow category in the past decade are startling. So, how did we get here? The answer: machine learning. Machine learning (colloquially referred to as ML) is a technique in which you train an algorithm on a data set in either a supervised or unsupervised manner. Typically, supervised training requires lots of manually tagged data, and the weights attached to the algorithm's parameters get adjusted over and over until its predictions improve. That may sound simple until you realize that some of these algorithms have hundreds of millions, billions or in one case even a trillion parameters. The most common type of ML model is called a neural network, a structure loosely inspired by the way your brain processes information. Any neural network with more than three layers is considered deep learning. Today, a very popular deep learning model is called a transformer. Don't worry, we went from 30,000 feet down into the weeds pretty quickly there, but we won't go much further – stay with me!
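To make the idea of adjusting weights concrete, here's a minimal sketch of supervised training with exactly one weight. The data, the learning rate and the `train` function are all made up for illustration; real models repeat the same kind of nudge across millions or billions of weights.

```python
# Toy supervised learning: learn the single weight w in y = w * x.
# The labeled examples and the learning rate are invented for illustration.
def train(examples, learning_rate=0.01, epochs=200):
    w = 0.0  # start with an uninformed guess
    for _ in range(epochs):
        for x, y in examples:
            error = w * x - y               # how far off the prediction is
            w -= learning_rate * error * x  # nudge the weight to shrink the error
    return w

# Labeled pairs (input, correct answer) that follow y = 3x.
examples = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0), (4.0, 12.0)]
learned_w = train(examples)
print(round(learned_w, 3))
```

The loop never gets told that the answer is 3. It only sees how wrong each guess was and corrects course, which is the whole trick behind "training."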

A transformer is a deep learning model that adopts the mechanism of self-attention, differentially weighting the significance of each part of the input data. It is used primarily in the field of natural language processing (NLP) and in computer vision (CV). Great explanation via YouTube here.
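For the curious, here's a stripped-down sketch of what "differentially weighting each part of the input" can look like. It is a simplification under stated assumptions: every vector acts as its own query, key and value, whereas real transformers first run the inputs through learned projection matrices, and the two-number "word" vectors are invented.

```python
import math

def softmax(scores):
    # Turn raw scores into positive weights that sum to 1.
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    # Simplified: each vector serves as its own query, key and value.
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        # Score the query against every vector (scaled dot product).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        # Blend all vectors together, weighted by how relevant each one is.
        blended = [sum(w * v[i] for w, v in zip(weights, vectors))
                   for i in range(dim)]
        outputs.append(blended)
    return outputs

tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # made-up 2-d "word" vectors
for row in self_attention(tokens):
    print([round(x, 3) for x in row])
```

Each output row is a mix of all the inputs, with the most similar inputs contributing the most.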

In essence, the objective of AI is to augment machines with the capability to think. Once you've trained up a model, it should be able to perform a task with a high degree of reliability and accuracy. It's the degree of difficulty that's changing. Beating Kasparov at chess is one thing, but driving a car autonomously or making national security decisions independently has entirely different implications. Part of the jump from rote, mechanical tasks to advanced use-cases like visually identifying objects or understanding innuendo is thanks to two sub-categories of AI research: computer vision (CV) and natural language processing (NLP).

That's it! These are the definitions you'll need to advance to the next stage of this article. Congratulations, you've passed the test.

You down with NLP? Yeah, you know me!

Our main story today concerns advances in natural language processing and the fate of this technology's evolution. As you can imagine, having a solid grasp on language is a big step forward for artificial general intelligence. We don't just want robots that can talk back, but ones that can synthesize information, develop opinions and recommendations, maybe even empathize with us lowly humans. That's a tall order, but leading AI researchers are breaking barriers regularly. Unlike many previous technological breakthroughs, though, AI research has been conducted behind closed doors at the largest and wealthiest private companies around the world. This has both accelerated progress (after all, it's quite resource intensive) and drawn ire from the broader academic, public and open-source communities that were all instrumental in paradigm-shifting developments of the past – ever heard of the internet?

The average person likely assumes that AI is simply everywhere, that anyone can do it. And while in some cases that's true (enterprise software companies baking some lightweight AI into their chatbot – let's call it dumb AI), it's actually really, really, really hard to develop a foundational model. It's also not possible to just copy & paste said model into your source code and voila, your software is AI-enabled. You might be surprised to learn that a lot of coding is just googling how other people did something, taking their code repository and tweaking it to apply it to your own use-case. In fact, this aversion to re-inventing the wheel is why developer tools like GitHub were invented (it sold to MSFT for $7.5B in 2018). A tendency towards open architecture and application programming interfaces (APIs) to enable interoperability and re-usable components became the norm in the era of cloud computing. This process has matured over the decades such that developers don't think twice about searching around for a for loop they already know exists out in the ether. But the AI developer ecosystem is still emerging and access to information (and understanding) isn't evenly distributed. So how does it work? Here's a typical scenario:

Google creates some new foundational AI-model for NLP

A dense, academic style paper gets published *mystery & intrigue ensues*

The lead researcher publishes their model (code) in some hidden corner of the internet (or distributes to a group of other leading AI scientists)

Those with access start playing with the model, tweaking the weights, or applying it to a new use-case; maybe they add some documentation on how it works

These early applications get published in some subreddit for AI geeks and get picked up by a few hackers or leading professionals

Eventually, it disseminates throughout the close-knit AI developer community and experiments with results start to circulate

But then… the original model gets upgraded to version 2, all your code breaks and the cycle starts again because you need that sweet, sweet upgrade!

I'm generalizing here, but the gist of it is this: there's a trickle down effect from the top AI institutions of the world (mostly big tech companies) to the curious indie AI researchers and internet sleuths willing to put in the time to piece together the puzzle. Not exactly the most efficient process for a group that prides itself on minification.

So we've got an efficiency problem on our hands. In my experience, inefficiency tends to present opportunity. And that's exactly where Hugging Face made their move. Hugging Face describes itself as "The AI Community Building the Future," one that enables developers to build, train and deploy state-of-the-art models powered by the reference open source in machine learning. In essence, they've taken the dirty work out of sorting through documentation, productized it so that you can access advanced models with just a few lines of code, and perhaps most importantly, developed a community of thoughtful, intelligent, open source-savvy AI developers. The co-founder and lead scientist, Thomas Wolf, is a French computer scientist (they still have an office in Paris). The HQ is now in Brooklyn and just last year they raised a $40M Series B round.

Walled Gardens Tend to Harbor Snakes

Problem solved, right? Not exactly. From an operational standpoint, Hugging Face has introduced an invaluable service to AI engineers worldwide (I've spoken to some, they love it!). But as I mentioned before, it's a select few organizations that have the talent, funding and resources to create these algorithms in the first place. And that presents a variety of ethical problems. As you may have guessed, it's the usual suspects playing in this arena.

When I say the barriers to entry are high, I'm not joking. Have you ever heard of a petaflop? I hadn't either. For the record, it's one quadrillion floating point operations per second. At first, I thought this might be related to our old friend the floppy disk (ubiquitous external storage device in the 90s with ~1.4MB of storage) but no such luck. A flop is a unit of computation, what's called a floating point operation, and a machine's speed is measured in how many of them it completes per second. So for example, if a machine takes 1 second to compute a mathematical operation like 3.5 x 9.7, it runs at 1 flop per second. A one-petaflop machine can do 1,000 trillion such operations per second. Sheeesh!
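For a sense of scale, here's some back-of-the-envelope arithmetic, treating machine speed as floating point operations completed per second. The workload size and the laptop speed are assumptions picked to make the math easy, not measurements of any real job or device.

```python
PETA = 10**15  # one quadrillion

def seconds_to_finish(total_operations, operations_per_second):
    # Time needed = amount of work / speed of the machine.
    return total_operations / operations_per_second

workload = 10**18               # hypothetical job: a billion billion operations
laptop_speed = 100 * 10**9      # assumed laptop: 100 billion operations per second
petaflop_machine = 1 * PETA     # a one-petaflop machine

laptop_days = seconds_to_finish(workload, laptop_speed) / 86_400
big_iron_minutes = seconds_to_finish(workload, petaflop_machine) / 60

print(f"Laptop: about {laptop_days:.0f} days")
print(f"One-petaflop machine: about {big_iron_minutes:.0f} minutes")
```

Under these assumptions, the same job that ties up a laptop for months is a coffee break for a petaflop-class machine.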

"GPT-3 has 175 billion parameters—10 times more than its predecessor, GPT-2. But GPT-3 is dwarfed by the class of 2021. Jurassic-1, a commercially available large language model launched by US startup AI21 Labs in September, edged out GPT-3 with 178 billion parameters. Gopher, a new model released by DeepMind in December, has 280 billion parameters. Megatron-Turing NLG has 530 billion. Google’s Switch-Transformer and GLaM models have one and 1.2 trillion parameters, respectively."

— MIT Technology Review
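To translate those parameter counts into something more tangible, here's a rough sketch of how much memory it takes just to hold each model's weights. It assumes every parameter is stored as a 16-bit number (2 bytes), which is my assumption and not something the quote states; the parameter counts are the ones quoted above.

```python
BYTES_PER_PARAMETER = 2  # assumption: 16-bit floating point weights

# Parameter counts as quoted above.
models = {
    "GPT-3": 175e9,
    "Jurassic-1": 178e9,
    "Gopher": 280e9,
    "Megatron-Turing NLG": 530e9,
}

def weights_in_gigabytes(parameters, bytes_per_parameter=BYTES_PER_PARAMETER):
    # Storage for the weights alone, ignoring everything needed for training.
    return parameters * bytes_per_parameter / 1e9

for name, parameters in models.items():
    print(f"{name}: ~{weights_in_gigabytes(parameters):,.0f} GB of weights")
```

That's only storage. Training needs far more memory and compute on top, which is why this isn't a weekend project on a gaming PC.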

What I'm saying here is that building a cutting edge AI model on your own today is like a farmer trying to launch a rocket to the moon from his grain silo in the 60s: it ain't happenin'. Even with the talent and the money you need vast amounts of computing power. It boggles the mind. So what if "big tech" has a monopoly on novel AI? Well, there are a few implications, two that are particularly important:

Bias: with a small subset of the population determining what data the model gets trained on, it could have adverse effects on the broader population (namely, minority groups). That could range from a racist chatbot to a lag in advancements in less dominant languages like Swahili, for instance.

Runaway AI: This might sound hyperbolic at first, but there is a genuine concern that we may progress on AI research too fast. So fast, in fact, that it gets out of our control (and without the general consensus of the public). There are simply so many unknowns regarding artificial general or superintelligence that outcomes could range from some sort of tech utopia where the robots live to serve us to the more probable version where super intelligent robots regard human interests the way we regard the livelihood of an ant crawling on the ground in front of us: we aren't out to destroy them, but we're fairly indifferent to their existence.

These are two crucial topics that need to be confronted head-on if we want to mitigate experiences ranging from inconvenient and offensive to life-altering or even extinction-inducing. I don't mean to be alarmist here. In fact, people much smarter than me have argued for oversight and research into these challenges. Though it might sound like a ways off, Google's injection of deep neural networks into their Translate tool literally changed performance overnight (2016). If we're sitting at the cusp of AGI, when the dam breaks, it will be hard to reverse. There are varying opinions here; the starkest contrast might be that between Elon Musk (concerned) and Jack Ma (laughably nonchalant) in their debate at the 2019 World AI Conference.

The good news is that our friends at Hugging Face have once again stepped in to provide a potential solution. It's called the BigScience project and it's a decentralized, open-source research consortium on large, multilingual models and datasets. The year-long effort can be summarized as follows, from their site:

“During one-year, from May 2021 to May 2022, 600 researchers from 50 countries and more than 250 institutions are creating together a very large multilingual neural network language model and a very large multilingual text dataset on the 28 petaflops Jean Zay (IDRIS) supercomputer located near Paris, France.

During the workshop, the participants plan to investigate the dataset and the model from all angles: bias, social impact, capabilities, limitations, ethics, potential improvements, specific domain performances, carbon impact, general AI/cognitive research landscape.”

The scope of the project is enormous. Just browse through their public Notion site and it's clear that (a) this will take extraordinary leadership and discipline and (b) there are a lot of good, smart people in the world willing to get involved *sigh of relief*. BigScience is structured by "working groups," each with their own objective and led by co-chairs who steward the effort. Examples include:

Challenges in the biomedical domain

Carbon footprint

Legal and Ethical Scholarship

Tokenization

Architecture & Scaling

The project is so expansive that there's even a working group dedicated to organizing the working groups! Their public documentation is fascinating – at times dense – and informative. There are several papers/artifacts already available for public consumption. The project was modeled on CERN's Large Hadron Collider (LHC), the world's largest high energy particle accelerator. It too is a multinational effort requiring massive coordination in the name of science. In April of last year, the group was granted access to use the French government's Jean Zay supercomputer to build the first open-source Large Language Model (LLM) that would facilitate independent research (i.e. chip away at the barrier to entry). So freakin' cool!

Zeroes & Ones

Hugging Face and the BigScience project aren't a cure-all for our AI woes. But it's reassuring to know there's an accountable body of extremely capable people working to ensure safe, intentional and accessible innovation in the space. My aim here isn't to demonize the work (or people) coming out of Big Tech's walled garden of AI. It's merely an effort to be scientifically skeptical of anything, especially powerful new technology that is driven and protected by profit-seeking corporations. There's an awful lot of out-of-the-box convenience associated with these innovations that deliver delightful experiences to consumers and keep the engines of revenue turning at massive tech companies. I love using my Google Home to get an answer or turn off a light; I was pleasantly surprised when, at US Customs, the Global Entry screen no longer needed my fingerprints but recognized me from a grainy photo (not to mention disheveled and sleepy!); it's wildly entertaining to watch TikTok deep fakes of Tom Cruise doing magic tricks. But remember there are trade-offs. Deep fake tech is used to create revenge porn that ruins lives; China has infamously instituted a social credit system leveraging a vast network of cameras paired with powerful AI to rank its citizens based on their actions; the information gleaned from every interaction with your smart speaker will build a database of information enabling an algorithm to know you better than you know yourself.

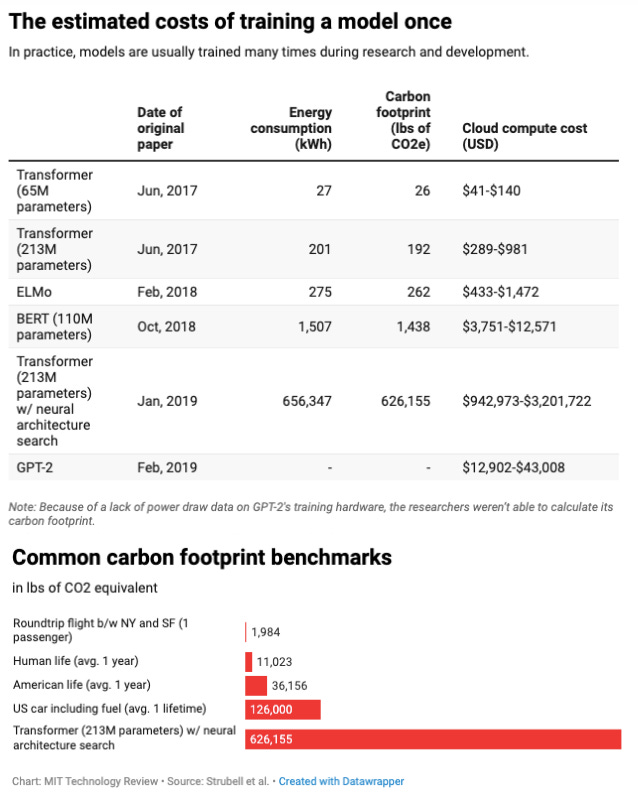

I like to give the benefit of the doubt to the world's leading innovators; nevertheless, there are troubling behaviors exhibited by even the world's most prominent (and loved) brands. Google forced out Timnit Gebru, the co-lead of their Ethical AI Team, after her research highlighted concerns around large scale AI models and their implications for race, climate, and the overall information landscape. For example, as NLP models begin to exert their influence in generating and moderating content, the misinformation flywheel could start to spin faster and faster. These types of repercussions will only serve to amplify the already noisy information space we inhabit today. Cost structure and CO2 emissions rank high on the list of concerns as well (although this debate has been simmering for a while in the blockchain world with mixed conclusions).

There isn't a definitive beginning and end to each phase of progression in AI research. We are simply here. And at some point, we'll be there. I'm super excited about the potential in this sector, but simultaneously hope to err on the side of caution as we peer out over the abyss. Companies like Hugging Face that deliver an amazing product to their customers AND foster open-source, research-driven communities as an antidote to runaway technology come few and far between. So let's celebrate this era of machine learning and the champions for positive progress with a silly yet approachable emoji: 🤗 !

Special thanks to Jeff Kiske, my ML super-star and best friend since 6th grade!