Welcome to Startup ROI, where I, Kyle O'Brien, share European Tech insights from my perch in Paris, France. You can expect to find articles covering startups & ecosystem trends, interviews with founders and investors, as well as updates on in-person events for community thought leaders.

Want to sponsor or collaborate? 📩 bonjour@startup-roi.com


Unnecessary Prologue

Every so often, I take a look at one of my cover images and think: maybe I should have been a graphic designer… What a sight to behold! Today's article has nothing to do with young Tom Cruise, nor an attractive woman posing seductively on an 80s sports car. This just happens to be the first place my mind goes when thinking of deep tech related puns. You can thank me later.

Actual Prologue

If not Tom Cruise playing air guitar in tighty whities, what are we here to discuss today? A related topic: instruction set architecture. See, I knew if I put that in the headline your eyes would glaze over and you'd move on to the next newsletter in your inbox. Or worse: unsubscribe. I didn't have a choice, I had to hook you with some cinematic nostalgia. But now that you're here, let's get into it.

Many of you are probably familiar with Moore's Law: the number of transistors on a microchip doubles roughly every two years. Named after Gordon Moore, co-founder of Intel, the approximation has largely held true since the early '70s. It became such a paradigm in the semiconductor industry that we aren't really sure if the industry eventually just adapted to fit the curve of our expectations over decades. Nevertheless, it became gospel and it heavily influences analyst predictions around computing power and the technological future we often aim to forecast. But there's a problem…

The problem is physics. For decades we've made strides in implementing smaller and smaller transistors on a microchip in a seemingly endless race towards cheaper and better computing. As a result, it's easy to forget that there are physical limitations to the miniaturization of our hardware. Which is to say, you can't get much smaller than the width of an atom, a limit we are rapidly approaching. So what happens when we inevitably hit the physical barriers our universe has so cruelly imposed on us? What happens if Moore's Law suddenly tapers off and runs into a horizontal asymptote after decades of exponential progression? Likely what always happens: we find another path, we build a new technology or we die trying (OK, a little melodramatic, I agree).
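To put the "doubles roughly every two years" rule into numbers, here is a rough Python sketch. The starting point is my own assumption and not something from this article: the Intel 4004 from 1971, with roughly 2,300 transistors. The function name `projected_transistors` is made up for illustration. It is an idealized curve, not a record of what real chips shipped with.

```python
def projected_transistors(base_count: float, base_year: int, target_year: int,
                          doubling_period_years: float = 2.0) -> float:
    """Idealized Moore's Law: the count doubles once per doubling period."""
    doublings = (target_year - base_year) / doubling_period_years
    return base_count * 2 ** doublings


# Assumed starting point: Intel 4004 (1971), roughly 2,300 transistors.
for year in (1971, 1981, 1991, 2001, 2011, 2021):
    print(year, f"{projected_transistors(2300, 1971, year):,.0f}")
```

Fifty years means 25 doublings, which is how a few thousand transistors turn into tens of billions on paper. That is also why a stall in the curve would be such a big deal.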

Not taking into account the geopolitics of chip fabrication, silicon production and state IP — we have ourselves a physics problem. One solution, namely quantum computing (an area we will explore further in the near future) might return us to the path of computing prosperity (faster, better, cheaper). Even so, quantum machines will require traditional silicon computers to interface with, at least in the near term. So we have to think more practically. We have to work with what we have. In fact, what we have is pretty gosh dang good. Yet the ever-changing landscape of computing, heavy concentration and barriers to entry make for a fragile innovation space and put a moratorium on creativity. There happens to be something rebellious and ready to unlock changes we haven't witnessed in decades. That something is… Tom Cruise. Kidding, it's RISC-V!

Following Instructions

As usual, to make sense of today's main topic, we have to do a quick history lesson. After all, RISC-V (pronounced “risk five”) implies there was a RISC 1, 2, 3 and 4… And frankly, that's just the tip of the iceberg here. We've talked about the concept of abstraction before: what you see when you interact with your MacBook or iPhone is quite a few steps away from the actual processing taking place on the machine itself.

Unless you're a developer, you've probably only experienced the application layer of the stack (see chart below). Even most developers hit their limits at the third layer, the programming language. If you're a full stack or hardware engineer, you may move progressively lower on this diagram. There are relatively few people who actually understand the lower half, and shockingly few who understand the entire stack. Computing has become such a massive industry over the decades that specialization is required. As a result, standardized protocols are crucial to keep the industry moving at pace. Generally speaking, this is a good thing — but we'll explore in a moment how it presents problems at particular inflection points in the arc of technological progress.

As illustrated in the diagram, the Instruction Set Architecture (hereafter, ISA) behaves like a membrane bridging the gap between human-readable programming languages and machine code, i.e. binary. Without going into an entry-level computer science course, the ISA defines the binary instructions that a compiler translates your program into and that the hardware then executes, like running a computation or storing data. The diagram below shows the breakdown of these binary strings. The opcode tells the machine what to do and the addressing mode says where to find the data to do it on.
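For the curious, here is a minimal Python sketch of what "breaking down a binary string" looks like in practice. It does not reproduce the diagram above. Instead it assumes the register-to-register ("R-type") layout from the RISC-V base instruction set, where the lowest 7 bits hold the opcode and the remaining fields name the registers involved. The function name `decode_r_type` and the sample constant are mine, purely for illustration.

```python
def decode_r_type(word: int) -> dict:
    """Split a 32-bit RISC-V R-type instruction word into its bit fields."""
    return {
        "opcode": word & 0x7F,          # bits 6..0: which class of operation
        "rd": (word >> 7) & 0x1F,       # bits 11..7: destination register
        "funct3": (word >> 12) & 0x7,   # bits 14..12: narrows down the operation
        "rs1": (word >> 15) & 0x1F,     # bits 19..15: first source register
        "rs2": (word >> 20) & 0x1F,     # bits 24..20: second source register
        "funct7": (word >> 25) & 0x7F,  # bits 31..25: narrows it down further
    }


# Encoding of `add x3, x1, x2` (x3 = x1 + x2) in the RISC-V base ISA.
ADD_X3_X1_X2 = 0x002081B3

fields = decode_r_type(ADD_X3_X1_X2)
print(fields)
```

The takeaway: an instruction is just a 32-bit number, and the ISA is the agreement on which bits mean what. Here the opcode says "register arithmetic" and the register fields say where the inputs come from and where the result goes.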

Now that we know the basic function, it raises the question: what makes a good ISA?

Programmability: In ancient times (the '70s), humans were the compilers (read: not efficient). Modern compilers translate high-level languages down to machine instructions, and fine-grained instructions help them optimize the process.

Implementability: Implementations for different products will inherently be different. The key is flexibility in implementation so that you can optimize for things like low-power, high reliability or cost. Example: mobile phone designers might be more interested in power consumption for battery life whereas a supercomputer facility prioritizes reliability.

Compatibility: The architecture needs to accommodate previously written programs and consider future evolutions of technology. Imagine if every time you updated your iPhone all your apps broke… Forward and backward compatibility are both critical here.

In the Wild West days of computing, you can see how a lack of standards would make for a messy path to building good products. Instruction sets varied greatly and issuing changes in your product might rely on a quirky implementation from Gary, the previous engineer who worked on your hot new scientific calculator product. Gary is gone now and so is his “IP” that made the damn thing work. There is a laundry list of ISAs but really only two that achieved mass adoption in today's world of abundant compute:

Intel's x86

ARM's RISC Architecture(s)

*This is an oversimplification, but the majority of today's chips are built on Intel's x86 or some design from ARM. There are many purpose-specific variations of CISC (complex instruction set computer) and RISC (reduced instruction set computer) architectures that align with these standards.

DisARMing the Rebels

The other day I found myself reading the archives of Anna-Sofia Lesiv, who writes some fantastic pieces for Contrary Capital. In her most recent personal essay titled “Criticizing Computers,” I found a quote she included that resonated quite well with this piece:

As I stated earlier, the full stack, as it were, is enormously complicated in today's computing environment. The hardware people don't know the middleware people who don't know the software people who don't know the people making the physical chips. This lack of collaboration across disciplines is a primary driver for global standards. If everyone is in a race towards superior computing but constrained to their own lane, at the very least we need each cross-section of the stack to communicate properly. Obviously it would be better if there was a clear line of communication across disciplines but cooperation is hard and there are market demands to be met.

What am I getting at? The ISA standards that won the hearts and minds of the computer industry early on have calcified into a rigid structure that sits at the intersection of hardware and software and the progress we expect to emerge in the field. The two aforementioned ISA providers have a stranglehold on the market for chip architecture. Intel, an obvious choice, due to their longstanding presence and leadership in the computing industry. But the second winner is lesser known (to the public) and more unusual…

ARM is a fascinating company for a number of reasons. Not least of which is the fact that they are the biggest chip company that has never actually built a chip. ARM is what's called a semiconductor design company, which means they design chip architecture, patent it and then license the intellectual property to chip manufacturers, OEMs, big tech companies or whoever wants to leverage their designs for custom chips. They are like the Uber of semiconductor design — powering the ecosystem with their tech but owning none of the capital infrastructure. Better yet, maybe they're more like the NYC Taxi Medallion.

The company was founded in 1990 in Cambridge, UK and one of the reasons they've been so successful is because they serve as the glue to hold the ecosystem together. All those disparate disciplines don't have to worry so much about cooperation — ARM handles it by setting the standards, fostering ecosystem development and everybody wins. In ARM's case, they've won big. Some crazy stats:

ARM's current market share is limited to around 13.1% of PC client processors — Source: Digital Trends

By 2026, Canalys estimates that up to 30% of PCs will be ARM-based while half of the CPUs used for cloud services will rely on ARM architecture — Source: Digital Trends

Arm reported its silicon partners shipped in the prior quarter a record 6.7 billion Arm-based chips, which equates to ~842 chips shipped per second — Source: ARM (2020)
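If you want to sanity-check that last stat, the arithmetic is short. The sketch below assumes a 92-day quarter, which is my assumption since the source does not say how long the quarter was. The function name `chips_per_second` is made up for illustration.

```python
def chips_per_second(chips_shipped: float, days_in_period: int) -> float:
    """Average shipping rate over a period, in chips per second."""
    seconds_in_period = days_in_period * 24 * 60 * 60
    return chips_shipped / seconds_in_period


# 6.7 billion chips over an assumed 92-day quarter.
rate = chips_per_second(6.7e9, 92)
print(f"{rate:.1f} chips per second")
```

Under that assumption the result lands just under 843 chips per second, in line with the ~842 quoted. A shorter 90 or 91-day quarter would push the figure somewhat higher.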

They sit at such a critical point in the supply chain that they've historically had a foundation-like status, promising to be a relatively neutral arbiter of chip architecture and to remain independent. That has changed since SoftBank acquired them and then tried to sell them to Nvidia (a fairly public failure). There are now discussions of taking the company public. To learn more about the story I highly recommend the podcast below with their new CEO:

ARM can largely be credited as an industry leader helping to usher in the previous 30 years of computing progress. The changes from desktop to mobile to cloud computing, the rise of application-specific integrated circuits (ASICs) and graphics processing units (GPUs) and even the burgeoning neural processing units (NPUs) contributing to the explosion of AI/ML can in some way be attributed to ARM's designs and ecosystem development. However, there are consequences to this rapid consolidation. Namely, pricing power and stymied innovation. ARM isn't quite a monopoly, but monopolistic externalities are at play. And they are becoming problematic as we push into the next wave of the computing industry.

No RISC, No Reward

Licensing ARM designs is exorbitantly expensive. On the order of tens of millions of dollars for something off-the-shelf. If you want an architecture license, meaning you can take their design and iterate upon it, it costs even more. For a company to go out and make a custom chip (with licensing fees, design work, prototype, production & testing) it can cost upwards of $100M. A great example of this is Apple and the M1 chip — they said screw Intel, we'll make something better that's optimized for our MacBooks and our user base (plus entirely vertically integrate their product suite). Where'd they turn? ARM. But that's only because they're Apple. They can afford it. For a young startup looking to disrupt the industry, the barrier to entry is simply too high.

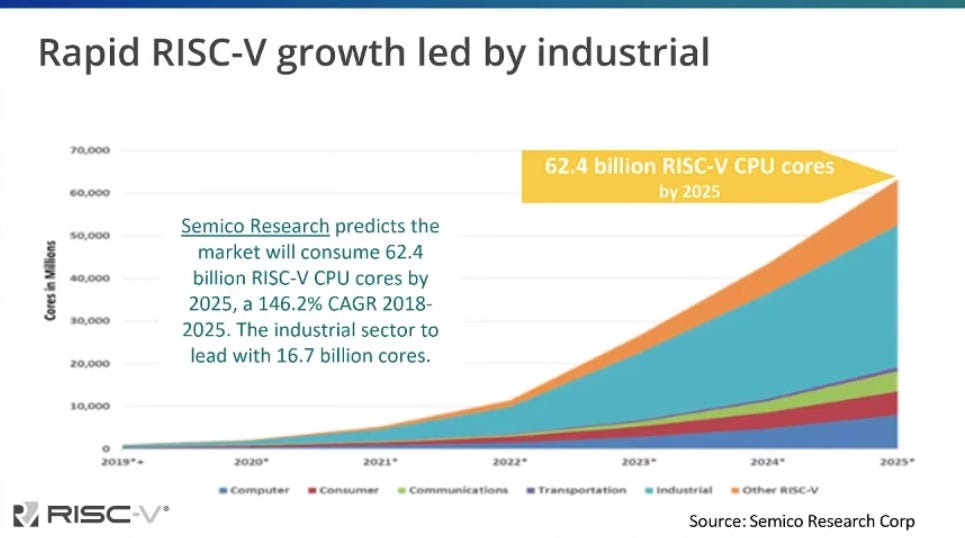

Enter RISC-V. The project started at UC Berkeley in 2010, and a white paper later presented it as an open-source alternative to ARM designs. You can imagine the potential threat here to the current paradigm. Affordable designs built through an open-source community could be a game changer. Especially now that we are at a tipping point for novel computing. Modern computers don't contain just simple, off-the-shelf CPUs. Bandwidth and networking capabilities have brought about the Internet of Things (IoT), industrial applications, automotive use cases, and of course increased complexity in consumer devices and cloud data centers. As the landscape for compute evolves, we need a requisite evolution in chip innovation to keep pace. There are probably thousands of startup ideas that have been shelved simply because of the cost of entry. The promise of RISC-V is an open standard that enables anyone to design the Systems on a Chip (SoCs) that will power the devices of tomorrow.

Since its inception, it's taken hold fairly rapidly. Of course, the RISC-V community has its work cut out for it. Ecosystem development is a critical role at ARM and will very much be at play for RISC-V. The RISC-V International foundation appears to be cultivating that ecosystem and gaining traction. In fact, even Intel became a sponsor of the project last year! The next generation of computing has so much potential: advances in AI/ML, quantum, VR/AR and technologies we have yet to encounter will need a supportive and innovative chip/hardware community to power them.

An ARM and a Leg

Where does this leave us? I don't want to leave you with the impression that ARM = BAD, RISC-V = GOOD. That's not my argument. Frankly, this feels to me like the natural progression in the business cycle. Consolidation leads to decentralization. New technologies introduce new requirements and attract new innovators. Think about it this way: can you imagine if HTTPS were owned by one (or a few) dominant organizations? Anyone who wanted to build a website and connect it to the internet would need to pay a licensing fee to this central authority, and there would be effectively no way around it. The internet wouldn't look like it does today if all the critical protocols weren't carefully overseen by independent governing bodies, non-profits and benevolent hackers.

Why shouldn't that be the case with hardware as we enter an era of exponential compute?

Are you working on projects in/around the RISC-V ecosystem?

Are you a passionate founder or investor exploring opportunities in next generation computing?

Are you an industry expert eager to share your perspective on this domain?

📩 Hit me up, let's chat → kyle@unit.vc

🤓 Looking for deeper insights on ISAs & RISC?

Intro to Computer Architecture at UPenn — Lecture

Instruction Set Architectures — NerdFirst (YouTube)